Introducing the Model Context Protocol (MCP)

Mike Adams

Introducing the Model Context Protocol: Why it matters for product development

We’re still in the early days of building practical applications on top of Large Language Models (LLMs) like OpenAI’s ChatGPT, Google’s Gemini, or Anthropic’s Claude. But one thing is already clear: LLMs alone aren’t enough. To move beyond simple question-and-answer interactions, they need three things:

- Prompts – carefully designed instructions or templates for repetitive tasks.

- Resources – documents, databases, or other sources of context.

- Tools – APIs and system calls that let the model act in the real world, safely.

The model itself (the LLM) is the engine that consumes all three.

The Model Context Protocol (MCP), an open standard created by Anthropic, provides a framework for connecting these pieces. Think of MCP as a common language that lets different AI components talk to each other: it defines what prompts, resources, and tools are, and how applications combine them with a model.
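To make the three building blocks concrete, here is a minimal Python sketch of how an application might model them. The class names, fields, and sample data are illustrative assumptions; they are not the MCP schema or any SDK’s API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Prompt:
    """A reusable instruction template with named placeholders."""
    name: str
    template: str

    def render(self, **values: str) -> str:
        return self.template.format(**values)


@dataclass
class Resource:
    """A piece of read-only context identified by a URI."""
    uri: str
    text: str


@dataclass
class Tool:
    """An action the model may ask the application to perform."""
    name: str
    description: str
    handler: Callable[..., str]


@dataclass
class Context:
    """Hypothetical container for everything a server offers."""
    prompts: dict[str, Prompt] = field(default_factory=dict)
    resources: dict[str, Resource] = field(default_factory=dict)
    tools: dict[str, Tool] = field(default_factory=dict)


# Illustrative data only.
ctx = Context()
ctx.prompts["summarize"] = Prompt("summarize", "Summarize {title} in {n} bullet points.")
ctx.resources["file:///notes.txt"] = Resource("file:///notes.txt", "Meeting notes ...")
ctx.tools["add"] = Tool("add", "Add two integers", lambda a, b: str(a + b))

print(ctx.prompts["summarize"].render(title="the meeting notes", n="3"))
print(ctx.tools["add"].handler(2, 3))
```

The split mirrors the list above: prompts are templates, resources are data to read, and tools are the only pieces that do something.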

Why MCP matters

Some people have called MCP the “USB for AI.” The metaphor isn’t perfect, but the spirit is right: MCP aims to become a universal, plug-and-play standard for connecting AI systems and their context.

If widely adopted, it could:

- Reduce the friction of integrating APIs and resources (today, every AI product reinvents this).

- Make applications more reliable and transparent in how they use context.

- Accelerate innovation by letting developers focus on design, not plumbing.

How MCP works

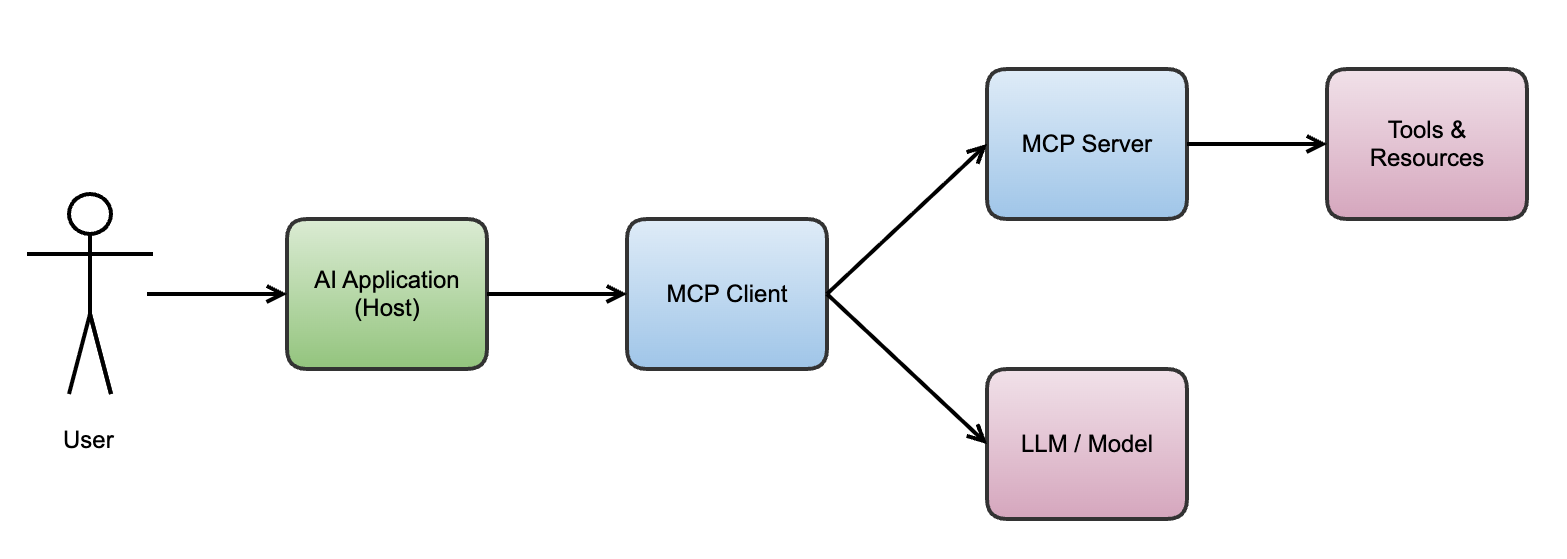

MCP uses a client-server model — much like the web:

- MCP Clients run inside an AI application or agent (the host). Each client maintains a connection to one MCP server, while the host itself talks to one or more LLMs.

- MCP Servers provide prompts, resources, or tools — effectively serving the context.

Users interact with the application, which mediates between them, the model, and the context. This separation makes MCP flexible: multiple clients can connect to multiple servers, and servers can even request LLM completions through clients without running their own models.

Simplified view of the MCP architecture
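The separation of roles can be sketched in a few lines of Python. This is a toy, in-memory model that assumes one client per server connection, with the host application owning the clients. The class names (`ToyServer`, `ToyClient`, `ToyHost`) and the sample tools are hypothetical, and nothing here speaks the real protocol.

```python
class ToyServer:
    """Stands in for an MCP server; a real one would speak JSON-RPC over a transport."""

    def __init__(self, name, tools):
        self.name = name
        self._tools = tools  # maps tool name -> Python callable

    def list_tools(self):
        return sorted(self._tools)

    def call_tool(self, tool_name, arguments):
        return self._tools[tool_name](**arguments)


class ToyClient:
    """One client holds the connection to exactly one server."""

    def __init__(self, server):
        self.server = server


class ToyHost:
    """The AI application: owns the clients and routes tool calls for the model."""

    def __init__(self):
        self.clients = []

    def connect(self, server):
        self.clients.append(ToyClient(server))

    def available_tools(self):
        # Build a routing table: tool name -> client that can serve it.
        table = {}
        for client in self.clients:
            for tool_name in client.server.list_tools():
                table[tool_name] = client
        return table

    def call(self, tool_name, arguments):
        client = self.available_tools()[tool_name]
        return client.server.call_tool(tool_name, arguments)


host = ToyHost()
host.connect(ToyServer("weather", {"weather_current": lambda location: f"Sunny in {location}"}))
host.connect(ToyServer("calendar", {"calendar_add": lambda title: f"Added '{title}'"}))

print(sorted(host.available_tools()))
print(host.call("weather_current", {"location": "Lisbon"}))
```

Because the host only sees a table of tool names, adding a third server is one more `connect` call; the model-facing code does not change.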


A walkthrough example: travel booking

Imagine you ask an AI application to help you book a trip. With MCP under the hood, here’s how it might play out:

- The AI app offers “Book a trip” as a pre-built task (via prompt templates).

- You provide parameters: location, dates, budget.

- The MCP client fetches real-time resources (flights, hotels, weather).

- The LLM evaluates options and recommends itineraries.

- You review and refine until happy.

- With permission, the app books your travel and updates your calendar.

This example illustrates MCP’s strengths: reusable prompt libraries, real-time resource fetching, clear user control, and real-world actions through tools.
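The steps of the walkthrough can be sketched as plain Python. Everything here is a stand-in: the offers are canned data, `recommend` replaces the LLM with a cheapest-option rule, and `book` only returns a string. The function names and prices are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    kind: str    # "flight" or "hotel"
    label: str
    price: float


# Step 1: a prompt template the app exposes as a pre-built task.
BOOK_TRIP_PROMPT = "Plan a trip to {location} from {start} to {end} within a budget of {budget}."


def fetch_offers(location: str) -> list[Offer]:
    """Step 3: stand-in for resource fetching; returns canned data."""
    return [
        Offer("flight", f"Direct flight to {location}", 420.0),
        Offer("flight", f"One-stop flight to {location}", 310.0),
        Offer("hotel", f"Central hotel in {location}", 540.0),
        Offer("hotel", f"Budget hostel in {location}", 180.0),
    ]


def recommend(offers: list[Offer], budget: float) -> list[Offer]:
    """Step 4: stand-in for the LLM; picks the cheapest flight and hotel if they fit."""
    flight = min((o for o in offers if o.kind == "flight"), key=lambda o: o.price)
    hotel = min((o for o in offers if o.kind == "hotel"), key=lambda o: o.price)
    return [flight, hotel] if flight.price + hotel.price <= budget else []


def book(itinerary: list[Offer], approved: bool) -> str:
    """Step 6: the side-effecting action runs only with explicit user approval."""
    if not approved:
        return "Nothing booked: user approval is required."
    return "Booked: " + "; ".join(o.label for o in itinerary)


# Step 2: the user supplies the parameters.
prompt = BOOK_TRIP_PROMPT.format(location="Lisbon", start="May 1", end="May 5", budget=600)
itinerary = recommend(fetch_offers("Lisbon"), budget=600)

print(prompt)
print(book(itinerary, approved=False))  # step 5: user is still reviewing
print(book(itinerary, approved=True))
```

The point of the `approved` flag is that reading context (steps 3 and 4) and acting on the world (step 6) are separate calls, so the application can put the user between them.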

Under the hood: protocol details

For developers, MCP is reassuringly familiar. It’s built on:

- JSON-RPC 2.0, carried over HTTP for remote servers or over standard input/output (stdio) for servers running locally.

- Well-defined message exchanges.

- SDKs in multiple languages for faster implementation.

Here’s a simplified example of a client requesting weather data:

Client request

    {
      "jsonrpc": "2.0",
      "id": 3,
      "method": "tools/call",
      "params": {
        "name": "weather_current",
        "arguments": { "location": "San Francisco", "units": "imperial" }
      }
    }

Server response

    {
      "jsonrpc": "2.0",
      "id": 3,
      "result": {
        "content": [
          {
            "type": "text",
            "text": "Current weather in San Francisco: 68°F, partly cloudy, winds W 8 mph, humidity 65%"
          }
        ]
      }
    }
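The same exchange can be reproduced with nothing but the Python standard library. The sketch below builds the request, passes it through `json.dumps` and `json.loads` to imitate the wire, and answers it with a toy dispatcher. The helper names (`make_request`, `handle`) and the canned weather text are assumptions for illustration, not part of any MCP SDK; the error codes are the standard JSON-RPC 2.0 ones.

```python
import itertools
import json

_ids = itertools.count(1)


def make_request(method, params):
    """Build a JSON-RPC 2.0 request with a fresh integer id."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


def weather_current(location, units="metric"):
    # Canned data for illustration; a real tool would query a weather service.
    temperature = "68°F" if units == "imperial" else "20°C"
    return f"Current weather in {location}: {temperature}, partly cloudy"


TOOLS = {"weather_current": weather_current}


def error(request_id, code, message):
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle(request):
    """Toy server-side dispatcher that understands only tools/call."""
    request_id = request.get("id")
    if request.get("method") != "tools/call":
        return error(request_id, -32601, "Method not found")
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return error(request_id, -32602, f"Unknown tool: {params.get('name')}")
    text = tool(**params.get("arguments", {}))
    # The response echoes the request id so the client can match the pair.
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "result": {"content": [{"type": "text", "text": text}]},
    }


request = make_request(
    "tools/call",
    {"name": "weather_current", "arguments": {"location": "San Francisco", "units": "imperial"}},
)
wire = json.dumps(request)            # what would travel over HTTP or stdio
response = handle(json.loads(wire))   # what the server sends back

print(json.dumps(response, ensure_ascii=False, indent=2))
```

The `id` field is what ties a response to its request, which is why the dispatcher copies it into every reply, including errors.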

Who’s using MCP?

MCP was announced in late 2024. Since then, adoption has been growing rapidly:

- Anthropic has published open-source SDKs in Python, TypeScript, C#, and more.

- Developers are experimenting with MCP servers for common tasks (search, calendars, weather, databases).

- Ecosystem momentum is building: early adopters are describing MCP as a foundational layer for AI applications.

The push to adopt MCP comes at a time when investment is pouring into AI like never before. According to LinkedIn News, non-AI startups are struggling to raise funds, while AI companies attract record capital. This “AI gold rush” means developers are racing to be first with new solutions, and a shared standard like MCP lets them reuse integrations instead of rebuilding them for every product.

Final thoughts

Standards shape ecosystems. Just as HTTP enabled the web and USB enabled plug-and-play hardware, MCP could enable the next generation of AI applications by making LLMs more usable, safer, and more interoperable.

We’re still at the start of this journey — but if you’re a developer, product manager, or entrepreneur working with AI, MCP is worth paying attention to.

Further reading

If you’d like to share your own experiences or thoughts, feel free to connect with me on LinkedIn or email me at mike@myglassesaredirty.com.